- Overview


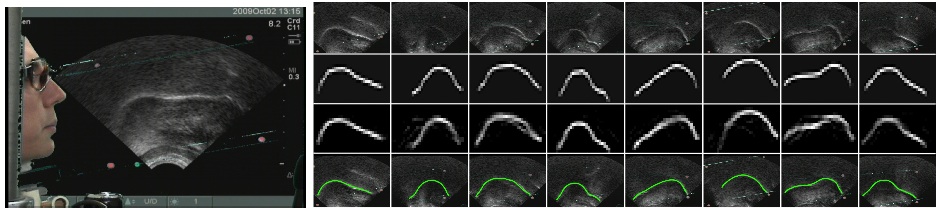

This research concerns computational tools built with the explicit intent of understanding human social behavior. In one project, we automatically analyze ultrasound data of the human tongue during speech. In another, we automatically analyze human facial expressions to make predictions about a variety of behaviors, such as whether a person is showing true or fake pain or true or fake joy, whether they understand a lecture, or whether they are about to fall asleep while driving.

The machine perception primitives we develop are useful in two ways. First, they enable analysis of human behavioral data at a scale previously unheard of among psychologists. For instance, through analysis of several hours of data from subjects either faking or truly experiencing having their arm submerged in ice water, our group determined that certain facial action units that psychologists had not previously predicted to be informative were highly predictive of true versus fake pain. Second, the machine perception algorithms themselves can help us understand how humans process this data. For instance, in the ultrasound speech study, we are testing motor theories of speech understanding in humans by determining if and how information about the articulatory apparatus is used in machine learning-based speech recognition algorithms. Beyond these two uses, we are performing studies of humans interacting with robots in order to understand how to build robots that interact better with people, largely by studying how humans change their behavior and how they respond to different perceptual features when interacting with machines that appear "intelligent".

- People


Faculty: Diana Archangeli (Linguistics)

Graduate Students: Jeff Berry (Linguistics), Filippo Rossi (Psychology)

Undergraduate Students

- Publications


Marian Stewart Bartlett, Gwen Littlewort, Claudia Lainscsek, Ian Fasel and J. Movellan. "Recognition of facial actions in spontaneous expressions", Journal of Multimedia, pages 22-35, 2006.

Ian R Fasel, Kurt D Bollacker and Joydeep Ghosh. "Neural network based classification and real-time biofeedback system for clarinet tone-quality improvement", 12th International Joint Conference on Neural Networks, 1999.

Ian Fasel, Michael Quinlan, and Peter Stone. "A Task Specification Language for Bootstrap Learning". In AAAI Spring 2009 Symposium on Agents that Learn from Human Teachers, March 2009.

Ian Fasel, Masahiro Shiomi, Pilippe-Emmanuel Chadutaud, Takayuki Kanda, Norihiro Hagita and Hiroshi Ishiguro. "Multi-Modal Features for Real-Time Detection of Human-Robot Interaction Categories", ICMI-MLMI, Cambridge, MA 2009.

Paul Ruvolo, Ian Fasel and Javier R. Movellan. "Learning Approach to Hierarchical Feature Selection and Aggregation for Audio Classification". Pattern Recognition Letters (in press).

Jacob Whitehill, Gwen Littlewort, Ian Fasel, Marian Bartlett and Javier R. Movellan. "Toward Practical Smile Detection", IEEE Transactions on Pattern Analysis and Machine Intelligence. 2009;31(11):2106-11.

Webmaster: Andrey Kvochko